In Fact, Retrieval Augmented Generation Is Also Not Enough to Meet User Requirements in Healthcare.

Because Sometimes You Want Exhaustive Answers

In the previous blog, we discussed how LLMs by themselves are insufficient for satisfying user requirements in healthcare. We suggested that, at a minimum, we need frameworks like RAG to provide a natural language interface for retrieval-oriented tasks in healthcare. We also discussed ways to mitigate hallucinations in retrieval-oriented healthcare applications where there is ground truth, such as the data in the EHR system. However, dealing with hallucinations only aims to ensure that the assertions in the text generated by the LLM are true. Healthcare users have two additional requirements: exhaustive enumeration, and the semantics of not finding anything. Let us look at each in turn and see why these requirements cannot be met reliably even with RAG-like frameworks.

Exhaustive Enumeration

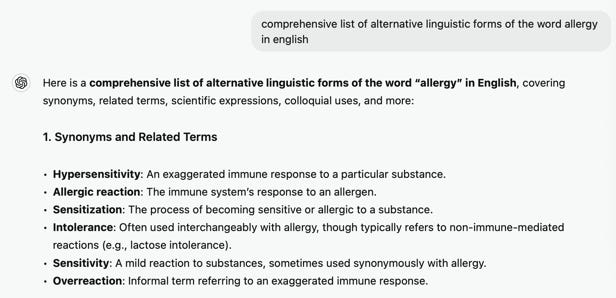

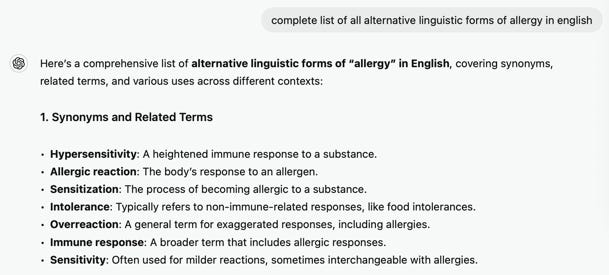

The only difference between the two queries is the first word of the query. What is amazing is that the outputs are almost identical, yet one response lists immune response as a separate item, whereas the other does not. There were other interactions where the LLM itself acknowledged that getting a comprehensive list is difficult. Now, I don’t know whether either of these two responses is the actual complete list of synonyms and related terms for allergy. Either answer is likely sufficient to get a passing grade in an English test, but the point I want to make is that when the impact of errors of omission can be large, we cannot rely on the LLM alone to produce the required output.
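One way to make an error of omission visible is to compare a response against a reference list as sets. The sketch below is only an illustration: the two term lists are placeholders, not the actual model outputs shown above, and `normalize` and `omissions` are hypothetical helper names. In practice the reference would have to come from a curated vocabulary rather than from another LLM response.

```python
def normalize(term: str) -> str:
    """Lowercase and collapse whitespace so trivially different spellings match."""
    return " ".join(term.lower().split())


def omissions(response_terms: list[str], reference_terms: list[str]) -> list[str]:
    """Return the reference terms that the response failed to list, sorted."""
    seen = {normalize(t) for t in response_terms}
    return sorted({normalize(t) for t in reference_terms} - seen)


# Illustrative placeholder lists, NOT the real outputs from the two queries.
response_a = ["Hypersensitivity", "Allergic reaction", "Immune response"]
response_b = ["hypersensitivity", "allergic  reaction"]

# Treating one response as the reference exposes what the other left out.
print(omissions(response_b, response_a))
```

The check is only as good as the reference list, which is exactly why the complete list needs to come from a symbolic source.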

Semantics of not finding anything

Q: Has patient had TB

A: The context does not mention TB or its onset date.

Ignore the specific words in the response for now. If the patient record (and I am including in this definition the documents about the patient from other organizations, available as CCDA docs) does have some mention of TB, especially that the patient had an allergic reaction to the TB test, we need to return that information. The physician may be about to order a TB test, and knowing that the patient had an allergic reaction to it sometime in the past is important. The point we want to make is that it does not matter to our users if the RAG pipeline does not have information about the query in its context. In our situation, from a user’s perspective, we are searching through all the data for the patient. When the RAG pipeline says it couldn’t find anything related to the query, we need to make sure it is because there is nothing to find in the universe of discourse from the user’s point of view.

One may argue that this problem arises because the retriever is not good enough, or because we must prune what appears in the context of the LLM as part of the RAG pipeline. If we were able to put the entire health record of the patient into the context of the LLM, the problem might go away. However, putting information irrelevant to the query in the RAG context can also give us poor quality results. So, this is not an easy problem to solve. There is also the cost and performance overhead of a model with a very large context window. We have chosen to work with cheaper models with smaller context windows. We must therefore do additional work to ensure that when the pipeline finds nothing relevant to the query, it is because there is nothing to be found. From an engineering perspective, context windows are like RAM for LLMs in the sense that it is good practice to use only as much as necessary for the problem at hand.
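The "additional work" can be pictured as a symbolic fallback that runs whenever the pipeline reports that it found nothing. What follows is a minimal sketch under stated assumptions, not our production design: `Document`, `exhaustive_mentions`, and `answer` are hypothetical names, the synonym list is supplied by hand, and whole-word matching stands in for whatever matching a real system would use.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


def exhaustive_mentions(documents: list[Document], terms: list[str]) -> list[str]:
    """Scan every document, not just the retrieved chunks, for whole-word matches."""
    patterns = [re.compile(rf"\b{re.escape(t)}\b", re.IGNORECASE) for t in terms]
    return [d.doc_id for d in documents if any(p.search(d.text) for p in patterns)]


def answer(rag_answer: str, rag_found: bool, documents: list[Document], terms: list[str]) -> str:
    """Report 'nothing found' only after a full scan also comes back empty."""
    if rag_found:
        return rag_answer
    hits = exhaustive_mentions(documents, terms)
    if hits:
        return "Possible mentions to review in: " + ", ".join(hits)
    return "No mention found in any document for this patient."


# Hypothetical record: one local note and one CCDA document from another organization.
record = [
    Document("note-1", "Follow-up visit for seasonal allergies."),
    Document("ccda-1", "Patient had an allergic reaction to the TB test."),
]
print(answer("The context does not mention TB.", False, record, ["TB", "tuberculosis"]))
```

Here the negative answer from the LLM is overridden because the full scan finds the CCDA document that never made it into the context. The scan touches every document, so "nothing found" now means nothing matched anywhere in the record, at least for the terms supplied.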

The two requirements above are reasonable requirements for any information retrieval system. In database systems, for example, both these problems are solved by design. There is a formal meaning for every query, and the runtime guarantees that it computes what the query intended. Net-net, when you run a query against a database, you are guaranteed to get *all* the rows that satisfy the query, and if you get nothing back, it is because there were *no* rows that satisfied the conditions in the query. However, the downside is that the queries must be written in a formal language, SQL, and all the data must be in relational form. On the face of it, using a model to go from text to a SQL-like language is the answer, but it is not. One reason is that our users don’t need the full power of SQL to answer their natural language queries. Another is that going from text to SQL reliably, even with fine-tuned LLMs, is still very hard. What we do need is to detect that users want an exhaustive enumeration of something and to pick up, from the user’s input, all the information we need to run a SQL-like query that produces the result. Finally, the store we query is a FHIR store, which has its own protocol and query language, not SQL.
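To illustrate what an exhaustive, database-style answer looks like against a FHIR store, here is a minimal sketch that follows the `next` links of a paged search result until none remain. It assumes the standard FHIR search Bundle shape (`entry[].resource` and `link[]` with a `relation` and a `url`). The `fetch` callable, the in-memory `PAGES`, the patient ids, and the relative URLs are all hypothetical stand-ins for a real HTTP client and server, and this is not a description of the ThetaRho implementation.

```python
from typing import Callable, Iterator


def iter_all_resources(first_url: str, fetch: Callable[[str], dict]) -> Iterator[dict]:
    """Yield every resource from a FHIR search by following 'next' links."""
    url = first_url
    while url:
        bundle = fetch(url)
        for entry in bundle.get("entry", []):
            yield entry["resource"]
        # A search Bundle advertises further pages via a link whose relation is "next".
        url = next(
            (link["url"] for link in bundle.get("link", []) if link.get("relation") == "next"),
            None,
        )


# Hypothetical canned responses standing in for a FHIR server.
PAGES = {
    "AllergyIntolerance?patient=example": {
        "resourceType": "Bundle",
        "entry": [{"resource": {"resourceType": "AllergyIntolerance", "id": "a1"}}],
        "link": [{"relation": "next", "url": "page2"}],
    },
    "page2": {
        "resourceType": "Bundle",
        "entry": [{"resource": {"resourceType": "AllergyIntolerance", "id": "a2"}}],
    },
    "AllergyIntolerance?patient=nobody": {"resourceType": "Bundle"},
}

ids = [r["id"] for r in iter_all_resources("AllergyIntolerance?patient=example", PAGES.__getitem__)]
print(ids)
```

Because the loop stops only when the server stops advertising a next page, the caller gets every matching resource, and an empty result means the search matched nothing rather than that something was pruned along the way.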

Summary

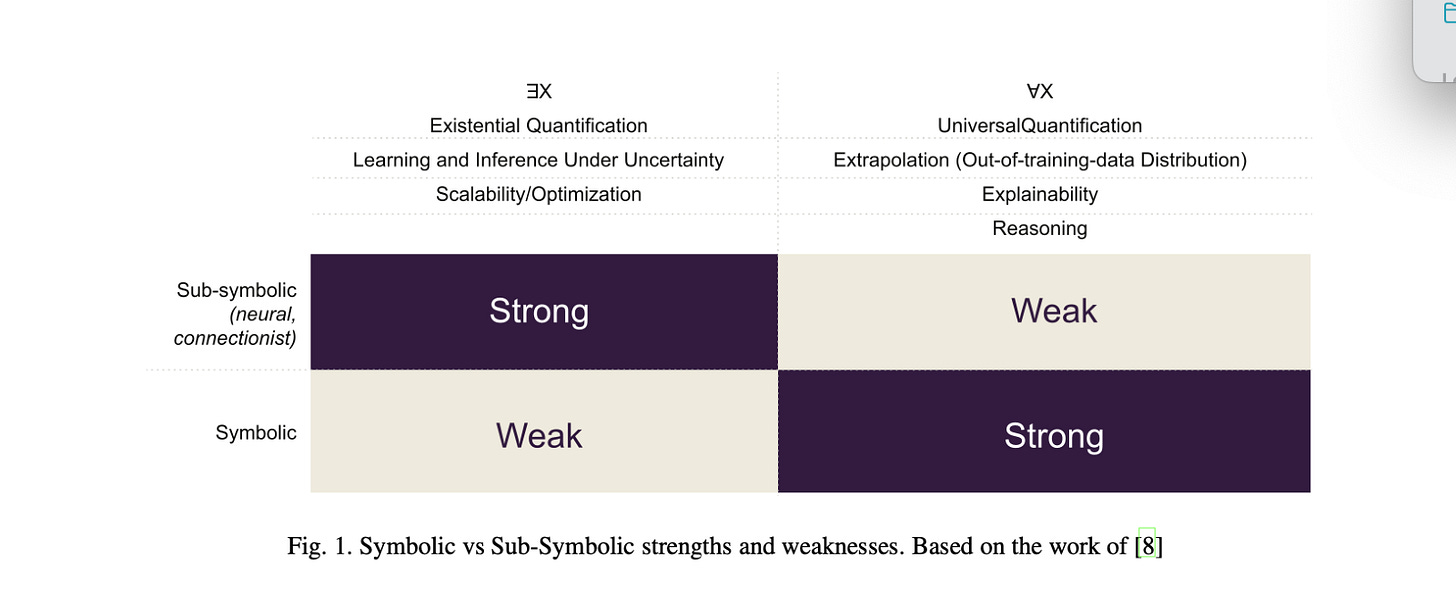

A simple way to understand the relative strengths and weaknesses of neural and symbolic systems is the diagram above from the following paper: Is Neuro-Symbolic AI Meeting its Promise in Natural Language Processing? A Structured Review.

Net-net, for retrieval use cases in healthcare, we need to build hybrid neuro-symbolic systems. We are building such a solution at ThetaRho, motivated directly by what our users want: grounding LLM output, exhaustive enumeration, and the semantics of returning nothing all need a jump to formal techniques. This jump must happen automatically, depending on context. In the next blog, we will zoom out to talk about why it is hard to measure the ROI of AI solutions in general and GenAI applications in particular.

Call to Action

Our journey has just begun. There is a fair amount of AI design, application logic development, operations hardening, and persistent testing and validation to be done to make the output of GenAI usable. But we are well on our way with established beta deployments.

We are seeking a few physician groups that use Athenahealth to help finalize the product. To learn more, please visit ThetaRho.ai and sign up.

Spend less time in the EHR so you can spend more time taking care of your patients, your family, and yourself.
