Why ROI for GenAI is Hard: Part 1

It Is Easy to Over-Estimate Benefits

Over the last few articles on this blog, we have mainly focused on why raw pieces of GenAI technology, whether LLMs alone or frameworks like RAG, may not be sufficient for solving the problems users face in healthcare. In the next few posts, we turn our attention to some of the challenges in estimating and realizing ROI for AI solutions, especially GenAI solutions, in the healthcare industry. While the ROI question has been raised recently in the context of GenAI, largely because of the immense investments being made, we will make the case in this post that many of the considerations are not new and apply equally to AI solutions that are not GenAI. We will first consider why it is hard to measure the benefit of GenAI solutions. In subsequent posts, we will discuss why it is hard to know how much effort is needed to realize that benefit, and finally what can be done about it. To begin, however, let’s quickly recap what has happened over the last two years.

Historical Recap

Even before ChatGPT and LLMs entered the popular vernacular almost two years ago, in Q4 2022, AI adoption was already picking up. AI was expected to be the main driver of the digital transformation that would fuel the next wave of investment in enterprise IT. As a result, according to IDC, most enterprise software providers were expected to embed AI capabilities in their offerings by 2025. I should know: my team at ServiceNow was doing exactly this for ServiceNow at the time. Macroeconomists had started predicting that AI would eventually drive productivity improvements across the overall economy after decades of relative stagnation in total factor productivity, with the exception of 2021. However, AI projects were failing at an alarmingly high rate: 85%, according to Gartner. Then ChatGPT happened. What has followed is two years of intense investment and dozens of groundbreaking innovations, so many that, as an engineer, I used to joke that if the ground breaks this often, we are left with quicksand. Net-net, AI had an ROI problem before GenAI, and nothing in the discourse about GenAI has addressed why ROI is hard for AI in general, let alone for GenAI.

Current Arguments about GenAI ROI

David Cahn of Sequoia Capital wrote “AI’s $600B Question” in June 2024, pointing out that it had been AI’s $200B question as recently as September 2023. Net-net, his point was that the investment binge in AI will likely cause some indigestion in the short term but is good in the long term. Financial services firms like Goldman Sachs and financial news organizations like the Wall Street Journal have also weighed in on the missing ROI of GenAI. This is partly because macroeconomists such as Daron Acemoglu of MIT now contend that the productivity effects of GenAI are likely to be much *smaller* than expected. Acemoglu argues that if the productivity gains from AI occur at the task level, then by estimating what fraction of tasks is impacted and the average cost savings per task, the macroeconomic effects of GenAI add up to about a 0.66% improvement in total factor productivity over 10 years, and even that may be a material overestimate. On the other hand, McKinsey reports suggest a $4.4T per year impact on the global economy and on company profits. But these McKinsey reports make the point I want us to focus on. World GDP in 2024 is expected to be around $110T, which would mean GenAI adds 4% to world GDP. Corporate profits are likely to be less than $10T worldwide, so, taken at face value, McKinsey’s figure implies corporate profits jumping by almost 50% because of GenAI. Which one is it? Acemoglu’s estimate and McKinsey’s cannot both be right. This highlights the fundamental problem: measuring the benefit of GenAI, or of any AI, is fraught with errors. In theory, it should be easier to measure the impact at the macro scale, but most organizations implementing AI or GenAI don’t care about the impact on the overall economy; they care about the benefit to their top line or bottom line.
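
The arithmetic behind these comparisons is simple enough to check directly. The Python sketch below uses only the rounded figures quoted above, in trillions of US dollars. It assumes, for illustration, that the entire McKinsey impact shows up as corporate profit, and it treats profits as exactly $10T, the upper bound, so the profit uplift it computes is a floor. The `task_level_gain` function is a hypothetical simplification of the kind of task-level estimate Acemoglu describes, run on made-up inputs; it is not his model and does not reproduce his published numbers.

```python
# Back-of-the-envelope check of the figures quoted in the text.
# Inputs are the rounded numbers from the post, in trillions of USD;
# they are not authoritative data.

MCKINSEY_ANNUAL_IMPACT = 4.4  # $T per year, as quoted
WORLD_GDP_2024 = 110.0        # $T, approximate
CORPORATE_PROFITS = 10.0      # $T, upper bound ("less than $10T")


def share(part: float, whole: float) -> float:
    """Return part as a fraction of whole."""
    return part / whole


def task_level_gain(fraction_of_tasks: float, avg_saving_per_task: float) -> float:
    """Hypothetical task-level estimate: the aggregate gain is approximately
    the fraction of tasks affected times the average cost saving on them."""
    return fraction_of_tasks * avg_saving_per_task


gdp_uplift = share(MCKINSEY_ANNUAL_IMPACT, WORLD_GDP_2024)
# Assumption: the entire impact flows to corporate profits.
profit_uplift = share(MCKINSEY_ANNUAL_IMPACT, CORPORATE_PROFITS)
# Made-up inputs for illustration: 5% of tasks affected, 15% saving on each.
illustrative_gain = task_level_gain(0.05, 0.15)

print(f"GDP uplift per year:     {gdp_uplift:.1%}")         # 4.0%
print(f"Profit uplift (floor):   {profit_uplift:.1%}")      # 44.0%
print(f"Task-level gain example: {illustrative_gain:.2%}")  # 0.75%
```

Because actual profits are below $10T, the 44% figure is a lower bound, which is consistent with the “almost 50%” above. The task-level calculation shows why estimates built bottom-up from tasks come out so much smaller: two modest fractions multiplied together.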

It Is Hard to Measure the Value of GenAI

There are multiple reasons why it is hard to measure the impact of AI. The first is that its impact depends on the solution in which it is embedded. AI is used in many kinds of solutions, ranging from “experience” (natural language interfaces) and “automation” (RPA, process automation) to security/ops (anomaly detection) and “forecasting”. Each of these solutions, and this list is by no means exhaustive, has different tradeoffs among value, feasibility, AI quality, and effort. At the top level, the value of any AI solution depends on at least three dimensions: data, workflow, and user experience. In each dimension, both the as-is state before GenAI and the to-be state after GenAI must be estimated to quantify the value of AI and of the transformation, and this is not easy.

- Data: Most organizations don’t know whether they have enough quality data for the business problem at hand, or what effort is needed to create it (labeling, cleaning, sourcing publicly available data, generating data, etc.). Ensuring that one has quality, representative data with provenance is a critical starting point for AI.

- Workflow: Most organizations, even those that have digitized and automated various workflows, do not know the impact of AI on those workflows. While value is easier to measure in the aggregate, mistakes are felt by users locally, one prediction at a time. Therefore, to extract value from AI, we also need to answer questions like the following, none of which is easy:

  - What is the cost of a “wrong prediction”? This may not be uniform across mistakes, so relying only on “theoretical” model quality metrics is a mistake.

  - When will the application know that a particular inference call returned the “wrong” prediction? Can the application logic recover from it, and if so, how?

- User experience: Many AI projects fail even when the data and workflow elements are taken care of. What remains is managing changes in user expectations and building trust with users to ensure adoption. This may involve:

  - Starting with a recommendation or suggestion, or embedding the AI completely within the application’s user experience so that it is “hidden” from the user.

  - Providing ways to gradually expand the set of users who are exposed to AI, so that the experience can be made predictable before adoption at scale.
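
The point about non-uniform error costs can be made concrete with a small Python sketch. It compares two hypothetical models that are both 90% accurate on the same 100 cases but make different kinds of mistakes. The per-error costs in `ERROR_COST` and the case data are invented for illustration, not measured values from any real workflow.

```python
from collections import Counter

# Hypothetical cost of each kind of wrong prediction (arbitrary units).
# These numbers are illustrative assumptions, not real figures.
ERROR_COST = {
    "false_negative": 50.0,  # something relevant was missed and must be caught manually
    "false_positive": 1.0,   # an extra suggestion the user simply dismisses
}


def accuracy(pairs):
    """Fraction of (predicted, actual) pairs that match."""
    return sum(p == a for p, a in pairs) / len(pairs)


def error_cost(pairs):
    """Total cost of the mistakes, weighting each error type differently."""
    counts = Counter()
    for predicted, actual in pairs:
        if predicted and not actual:
            counts["false_positive"] += 1
        elif actual and not predicted:
            counts["false_negative"] += 1
    return sum(ERROR_COST[kind] * n for kind, n in counts.items())


# 100 hypothetical cases per model; both get 90 right.
model_a = [(True, True)] * 45 + [(False, False)] * 45 + [(True, False)] * 10
model_b = [(True, True)] * 45 + [(False, False)] * 45 + [(False, True)] * 10

for name, preds in [("A", model_a), ("B", model_b)]:
    print(name, accuracy(preds), error_cost(preds))
# A 0.9 10.0
# B 0.9 500.0
```

Accuracy cannot tell the two models apart, while the cost-weighted view differs by a factor of 50. In practice, those per-error costs have to come from the workflow itself, which is exactly the as-is/to-be estimate that is hard to make.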


Healthcare-Specific Challenges

-

Data: As an industry, healthcare generates 30% of all the data generated in the world. However, 97% of data generated in a hospital is never used. Current EHR systems, that are the dominant system-of-record in hospitals, the data model is optimized for billing and can be very fragmented for patient care, from a user perspective even within a single organization. However, as an industry, it tends to be a late adopter when it comes to adopting new technologies because of the perceived complexity of complying with regulatory requirements in the industry. This means that most organizations do not have a handle on data under their management making it difficult to baseline current state and estimate effectiveness of AI solutions.

-

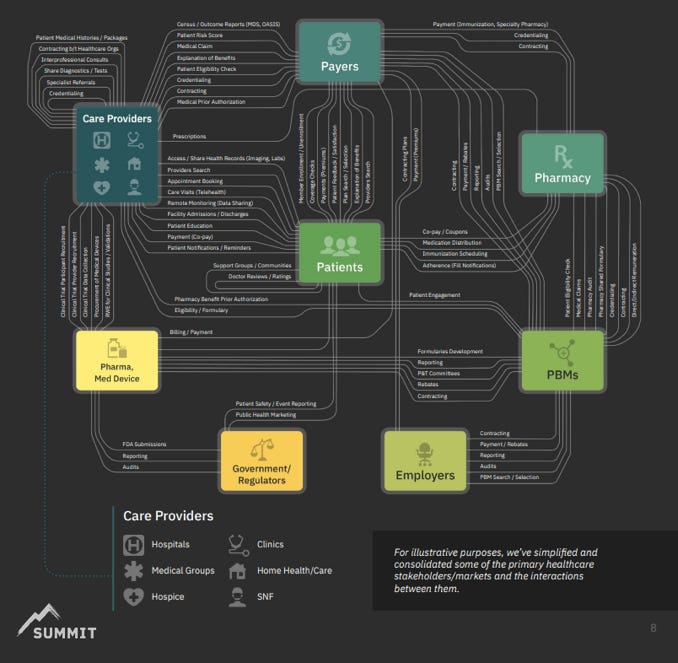

Workflow: Every workflow in healthcare is complex, especially workflows that cut across organizations as seen in figure below. Most workflows, even administrative workflows, in healthcare provider organizations are still manual, probably because they need to adapt to personal differences across patients. AI has the potential to improve these administrative workflows, but the value depends on the workflow. Again, the lack of uniformity and consistent metrics for efficacy of workflows means estimating the impact and benefit of AI is difficult.

-

User experience: Healthcare users are a very diverse lot with very different requirements from similar applications. For example, the requirements from an EHR system for a primary care doctor are very different from an anesthesiologist or a cardiologist. Not to mention a physician versus a nurse practitioner versus an office manager or a billing or coding person. This makes designing such systems to get adoption across the user base particularly challenging. In addition, if we want to target researchers or patients, the hurdle is different.

There are, therefore, fundamental hurdles to overcome before the value of AI in healthcare can be estimated reliably. In the next post, we will look at the challenges in estimating the cost or effort of getting to that value. Because both value and effort are difficult to estimate, ROI becomes almost impossible to measure. Finally, we will look at what can be done to address these challenges and what we are doing at ThetaRho to overcome them.
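
To see why uncertainty in both value and effort makes ROI so hard to pin down, consider a minimal Python sketch with hypothetical numbers. It assumes the simple definition ROI = (value - cost) / cost, and that value and cost are each known only to within a factor of two; the dollar ranges are made up and do not describe any real project.

```python
# Illustrative only: how uncertainty in value and cost compounds in ROI.
# The ranges below are hypothetical, not estimates for any real project.


def roi(value: float, cost: float) -> float:
    """Simple ROI: net benefit divided by cost."""
    return (value - cost) / cost


# Value and cost are each known only to within a factor of 2 ($M, hypothetical).
value_low, value_high = 1.0, 2.0
cost_low, cost_high = 1.0, 2.0

worst = roi(value_low, cost_high)  # low value, high cost
best = roi(value_high, cost_low)   # high value, low cost
print(f"ROI ranges from {worst:.0%} to {best:.0%}")
# ROI ranges from -50% to 100%
```

Even this modest uncertainty on each side leaves us unable to say whether the project loses half its investment or doubles it.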

Call to Action

At ThetaRho, our goal is to provide physicians with the patient information they need to practice medicine with fewer clicks, allowing them to focus on their patients' needs.

Our journey has just begun. There is a fair amount of AI design, application logic development, operations hardening, and persistent testing and validation to be done to make the output of GenAI usable. But we are well on our way with established beta deployments.

We are seeking a few physician groups that use Athenahealth to help finalize the product. To learn more, please visit ThetaRho.ai and sign up.

Spend less time in the EHR so you can spend more time taking care of your patients, your family, and yourself.
